This is the second of two articles about haptic design. It provides an overview of the human sensory systems involved in touch and the technology created to manipulate those senses. The first article, Haptic Design Principles, was published earlier in this series.

Beyond the Buzz

Though you may not realize it, you’ve probably already encountered haptic technology through your smartphone, watch, or gaming device. When information is communicated to you through your sense of touch, whether it’s the rumble of a game controller as an enemy vehicle approaches or the buzz of an incoming message on your wrist, that’s a designed haptic experience. Today’s consumer haptics are mostly limited to vibration, but the potential for tactile experiences is far richer and more complex.

The Sense of Touch

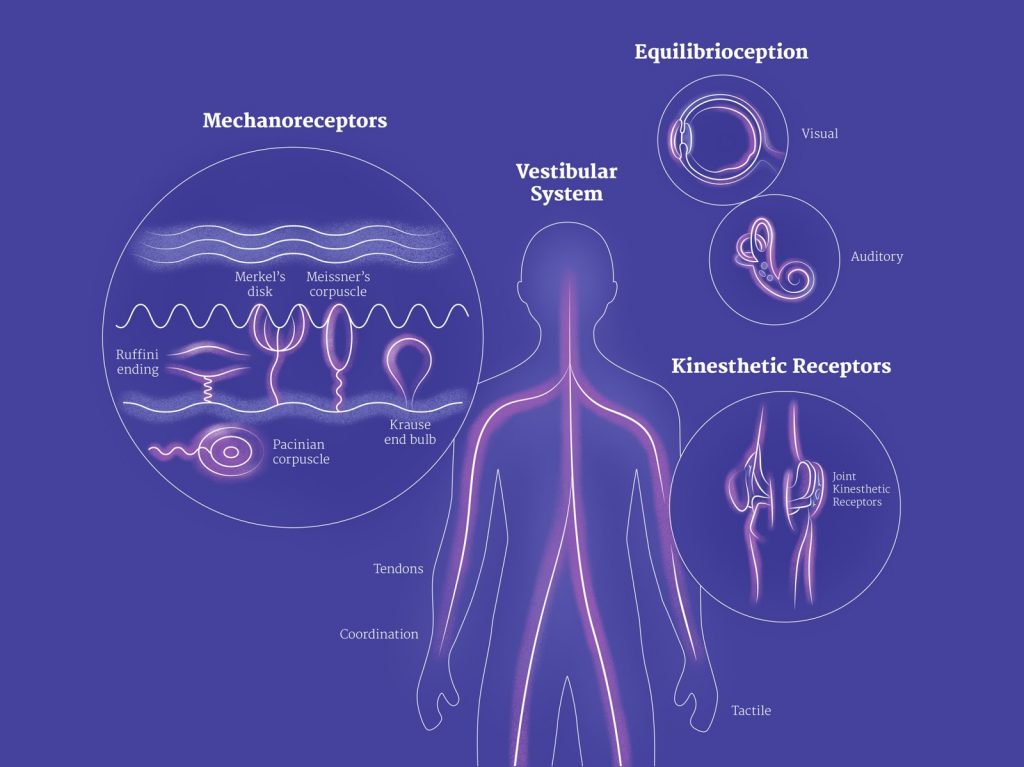

What we casually call the “sense of touch” is in fact a group of interconnected sensory systems. These include a variety of mechanoreceptors in the skin that sense pressure, vibration, and texture; distinct thermoreceptors that separately detect warmth and cold; specialized nociceptors that respond to mechanical, thermal, and chemical stimuli that cause or threaten tissue damage, which we perceive as pain; and proprioceptors in the skin, muscles, and joints that track motion, stretch, and force. There are even dedicated neural pathways linked directly to the tactile communication of emotion. Compared with vision or hearing, our sense of touch is highly layered and complex, which makes it difficult to replicate and manipulate digitally.

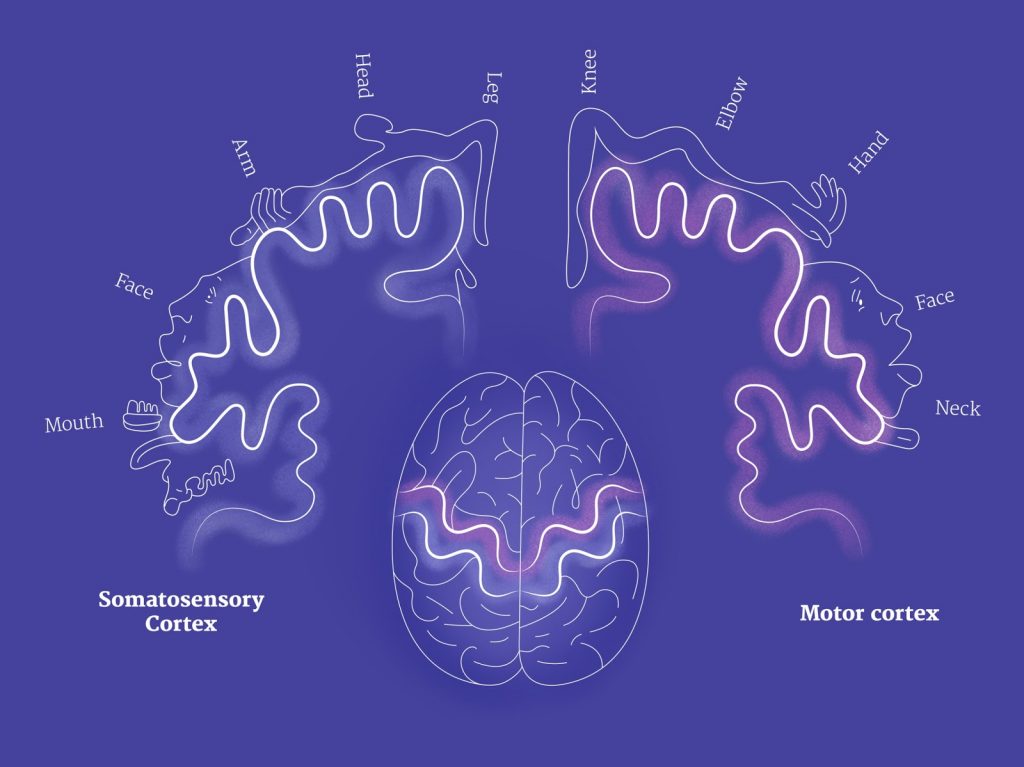

And unlike vision, which can be quite passive, touch is inherently active and bidirectional. When we want to feel something, we reach out to touch it, using practiced movements and gestures to sense texture, shape, and weight. In the brain, the motor cortex, which coordinates body movement, and the somatosensory cortex, which processes tactile information, lie immediately adjacent to each other, with parallel structures and countless connections between them. Our physical actions and tactile sensations are deeply intertwined. For digital haptic experiences, this means inputs and outputs must be tightly integrated with minimal lag, which is why low-latency 5G connectivity is so widely anticipated as a game changer.

What’s more, haptic experiences can’t be designed in isolation. As we move through life, we build mental models of our world by integrating all of our sensory inputs, and where there are gaps, our brains work hard to fill in the blanks. Our perception of the world is a fusion of sensory information and powerful extrapolation. To create a truly immersive experience, the visual, audio, and haptic channels all need to be designed together.

The Tech of Touch

It’s important to understand the sensory systems in our bodies to design effectively for touch, but it’s equally important to understand the technology created to manipulate those senses. That technology is also entering an exciting phase of growth. Immersion Corporation, a pioneer in haptic technology that powers more than 3 billion devices worldwide, expects a variety of haptic modalities to become increasingly relevant to the design of consumer experiences in the near future. These modalities include:

Vibrotactile

The vast majority of haptic experiences are vibration-based. Eccentric rotating mass (ERM) motors and linear resonant actuators (LRAs) drive most of the haptics in today’s smartphones and wearable devices, but they offer designers little or no control over frequency, a key parameter of vibrotactile perception. Newer high-definition (HD) actuators, like those in the Nintendo Switch Joy-Con controllers, enable a wider vibration range and layered haptic patterns with independent control of amplitude and frequency.
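To make independent amplitude and frequency control concrete, here is a minimal sketch, assuming a hypothetical actuator that accepts a stream of normalized drive samples in [-1, 1]; real HD actuator drivers each expose their own interfaces. Each pattern is a sine burst whose frequency and amplitude are chosen separately, and patterns are layered by summing them sample by sample. The sample rate, frequencies, and function names are illustrative assumptions, not values from any particular device.

```python
import math


def sine_burst(freq_hz, amplitude, duration_s, sample_rate=8000):
    """Hypothetical helper: a sine burst with independently chosen frequency and amplitude.

    Returns normalized drive samples in [-amplitude, amplitude].
    """
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must be between 0 and 1")
    n = round(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def layer(*patterns):
    """Hypothetical helper: mix patterns by summing them sample by sample, clipped to [-1, 1]."""
    length = max(len(p) for p in patterns)
    mixed = []
    for i in range(length):
        total = sum(p[i] for p in patterns if i < len(p))
        mixed.append(max(-1.0, min(1.0, total)))  # stay within the assumed drive range
    return mixed


# Illustrative values: a strong low-frequency "rumble" layered with a faint high-frequency "texture".
rumble = sine_burst(freq_hz=60, amplitude=0.6, duration_s=0.2)
texture = sine_burst(freq_hz=250, amplitude=0.3, duration_s=0.1)
effect = layer(rumble, texture)
```

Because frequency and amplitude are separate parameters, a designer could keep a pattern’s strength constant while shifting its pitch, or stack a subtle texture on top of a rumble, which is the kind of control ERM and LRA actuators largely lack.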

Buttons

Another emerging application of vibration is button simulation. All around us, on our devices, cars, and appliances, physical buttons are being replaced by touchscreens. Most touchscreens lack the tactile affordances that make buttons so simple and easy to use. With a combination of haptic and audio feedback, however, pressing a touch surface such as the Apple Force Touch trackpad can be made to feel so much like clicking a mechanical button that most people would have trouble telling the difference.

Surfaces

Touchscreens and other surfaces are also being enhanced with programmable textures and other surface haptic effects. Companies like Tanvas and Hap2U use electrostatic charge and ultrasonic vibration, respectively, to apply forces to the fingertip as it moves across the surface, creating a variety of tactile sensations.

Thermal

Temperature elements, like those in the Embr Wave bracelet, rely on the thermoelectric (Peltier) effect: current flowing through junctions of two dissimilar conductors heats one side of the element and cools the other, making the device feel warm or cool to the touch. Perceived temperature can be adjusted over a range of about 10°F (roughly 5.6°C) within just a few seconds.

Kinesthetic

Body-mounted force-feedback devices can assist or resist movement and create the illusion of motion, shape, and mass. These experiences require grounding (something to push against) and a lot of power, so such devices have traditionally been too bulky for practical use, but recent examples like the Dexmo haptic glove and Seismic robotic clothing demonstrate ongoing progress in this area.

Contactless

Most haptic feedback requires direct contact with the body, but some technologies can deliver tactile sensations through the air. This can be done with directed air currents or, as with Ultraleap, with focused ultrasonic waves that create surface sensations and render volumetric objects in midair above an array of ultrasound emitters.
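Focused ultrasound of this kind is generally built on phased-array focusing: each emitter is driven with a phase offset chosen so that all waves arrive at the focal point in step and add up to a localized pressure peak. The sketch below computes those offsets for a hypothetical flat grid of emitters, assuming each emitter is driven as sin(ωt − φ) and that sound travels at about 343 m/s in air. The 40 kHz frequency, grid layout, and function name are illustrative assumptions, not Ultraleap’s implementation.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
FREQ_HZ = 40_000.0      # assumed emitter frequency, a common choice for airborne ultrasound


def focus_phases(emitters, focal_point, freq_hz=FREQ_HZ, c=SPEED_OF_SOUND):
    """Hypothetical helper: phase delays (radians, in [0, 2π)) that bring every emitter's
    wave to focal_point in phase, assuming emitter i is driven as sin(ωt − φ_i)."""
    wavelength = c / freq_hz
    distances = [math.dist(e, focal_point) for e in emitters]
    farthest = max(distances)
    # Nearer emitters are delayed by the extra path length the farthest emitter's wave travels,
    # so every wave reaches the focus with the same total phase.
    return [(2 * math.pi * (farthest - d) / wavelength) % (2 * math.pi) for d in distances]


# Hypothetical 4 x 4 grid with 1 cm pitch in the z = 0 plane, focused 20 cm above its centre.
grid = [(x * 0.01, y * 0.01, 0.0) for x in range(4) for y in range(4)]
phases = focus_phases(grid, (0.015, 0.015, 0.20))
```

A steady 40 kHz focus is far above the vibration frequencies skin can detect, so practical systems also modulate the focal point’s strength or position at a perceptible rate; that step is left out of this sketch.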

Neural & Cortical

Finally, researchers are also exploring neural and cortical interfaces for tactile perception and motor control. The complexity and adaptability of our sense of touch make these experiences difficult to replicate and to keep stable over time, but research on brain-computer interfaces and neuroprosthetics could lead to the haptic equivalent of the cochlear implant, as well as to more extreme forms of human sensory augmentation.

A Feel for Haptic Design

With a foundational understanding of these technical modalities, product designers must apply human-centered research and design processes to use these haptic interactions in ways that add delight and value to future consumer experiences. Technology alone will not transform the future. True innovation is achieved only when strategic product design harnesses the power of technology to meet real human needs.

For the principles of haptic design, see the first article in this series.

A Punchcut Perspective

Nate Cox, Punchcut | David Birnbaum, Immersion Corp | Graphics: Paige Cameron, Punchcut

© Punchcut LLC, All rights reserved.